Decoding the Echoic Brain

Yiannis Cabolis

Auditory Localization, Immersive Soundscapes, and the TruSound Paradigm

This document is a modular and expandable executive briefing that explores how humans hear, process, localize, and emotionally engage with sound. It connects foundational neuroacoustic science to TruSound's paradigm of object-based 3D spatial audio and immersive storytelling.

Each section below will be developed individually and iteratively, allowing future updates without needing to modify the entire document.

I. The Echoic Brain as Immersive Interface

The human brain is not merely a biological receiver of sound; it is an interpretive interface that constructs spatial meaning, emotional resonance, and memory from sound waves. At TruSound, we refer to this as the Echoic Brain: the part of our neurological structure responsible for decoding and re-encoding acoustic stimuli into experience.

Unlike vision, which is largely front-facing and limited to the field of view, auditory perception is omnidirectional and unfolds continuously in time. Our brain builds an immersive understanding of the environment by constantly mapping sonic inputs in space and time. In essence, it acts as an echoic processor, analyzing characteristics such as frequency, amplitude, phase, direction, duration, and reverberation.

When we consider sound as immersive media, we are engaging the same mechanisms that kept our ancestors alive: orienting to the sound of a predator’s rustle, distinguishing a loved one’s voice in a crowd, or recalling a distant memory triggered by a melody. The Echoic Brain is responsible for these functions through a hierarchy of processing systems:

- The outer and middle ear provide mechanical collection, filtering, and amplification

- The cochlea translates vibrational energy into neural impulses

- The auditory nerve transmits this signal

- The brainstem, midbrain, and auditory cortex interpret spatial, temporal, and emotional information

TruSound’s object-based approach aligns precisely with this pathway. Each sound object, defined in space and behavior, interacts with the listener’s Echoic Brain not as noise, but as presence. Unlike stereo or mono mixes, object-based sound enables dynamic auditory localization, emotional scaling, and cognitive anchoring.

By understanding how the Echoic Brain functions, we shift sound design from a passive background layer into a core architectural and narrative tool. In this paradigm, immersive sound is not just heard; it is inhabited.

II. The Cochlea as a Neuroacoustic Engine

The cochlea, often described as the ‘keyboard of the brain’, is a spiraled organ that acts as both transducer and tuner, translating air pressure variations into neural information. This transformation is not generic; it is frequency-specific. The cochlea organizes sound using a principle known as tonotopic mapping, in which different frequencies stimulate different regions along its length.

The basal end, nearest the oval window, responds to high frequencies; the apical end responds to low frequencies. Between them lies a continuum that is literally fluid: incoming sound sets the cochlear fluid in motion, and the basilar membrane vibrates most strongly at the place tuned to each frequency. Hair cells convert these mechanical vibrations into electrochemical signals, triggering synaptic release that encodes the information in the auditory nerve fibers.
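Tonotopic mapping can be made concrete with the Greenwood place-frequency function. This is a published empirical fit for the human cochlea, not a TruSound component. It maps relative position x along the basilar membrane, from 0 at the apex to 1 at the base, to a characteristic frequency F = A(10^(a·x) - k). The constants below (A = 165.4, a = 2.1, k = 0.88) are the commonly cited human values. Treat the sketch as illustrative.

```python
def greenwood_frequency(x: float, A: float = 165.4, a: float = 2.1, k: float = 0.88) -> float:
    """Characteristic frequency in Hz at relative cochlear position x.

    x = 0.0 is the apex (low frequencies) and x = 1.0 is the base (high
    frequencies). The default constants are the commonly cited human fit.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A * (10 ** (a * x) - k)


if __name__ == "__main__":
    # Sample the map at five evenly spaced points from apex to base.
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"x = {x:.2f} -> {greenwood_frequency(x):8.1f} Hz")
```

At the two ends, the function gives roughly 20 Hz at the apex and roughly 20.7 kHz at the base. This is why it is often used to relate cochlear place to the nominal range of human hearing.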

This engine is extraordinary in its resolution. We can distinguish subtle frequency shifts, identify complex harmonic structures, and even perceive the pitch of a fundamental frequency that is absent from the signal, a phenomenon known as the missing fundamental effect.
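A simplified way to see the missing fundamental uses exact harmonics. When a sound contains only exact harmonics of some frequency, its waveform repeats at the greatest common divisor of the component frequencies. Listeners typically hear a pitch at that rate even when no energy is present there. The sketch below assumes idealized harmonics at whole-hertz frequencies. Real pitch perception is considerably more complex than a GCD.

```python
from functools import reduce
from math import gcd


def implied_fundamental(partials_hz: list[int]) -> int:
    """Periodicity pitch of exactly harmonic partials given in whole hertz.

    Simplified model: a sum of such components repeats at the greatest common
    divisor of their frequencies, the rate at which the pitch is usually heard.
    """
    if not partials_hz:
        raise ValueError("need at least one partial")
    return reduce(gcd, partials_hz)


if __name__ == "__main__":
    # Harmonics 2 to 5 of 200 Hz; the 200 Hz component itself is absent.
    partials = [400, 600, 800, 1000]
    f0 = implied_fundamental(partials)
    print(f"{partials} Hz -> implied pitch {f0} Hz, period {1000 / f0:.1f} ms")
```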

TruSound emulates this biological architecture. Its rendering algorithms treat each sound object with spectral independence. No flattening. No frequency bleeding. Just clear, neurologically resonant output. This mirrors how the cochlea parses a sonic environment: disentangling its components, encoding them, and delivering a coherent experience.

Moreover, TruSound’s ability to assign dynamic trajectories and behavioral properties to each sound mirrors how the cochlea receives continuous input, not as static tones, but as evolving waveforms. Thus, TruSound becomes a neuroacoustic partner, translating design intent into forms the body is evolutionarily equipped to understand.

In designing for the cochlea, we design not for the ear, but for the entire auditory perception chain. TruSound does this natively.

III. Transduction Pathway: From Fluid Motion to Neural Signal

The miracle of hearing begins with motion (air particles set in vibration by a source) and culminates in perception. Transduction is the conversion of this mechanical energy into neural signals, a journey that defines the physiological basis of hearing.

It begins in the outer ear, which funnels sound into the ear canal to vibrate the tympanic membrane (eardrum). These vibrations move the three bones of the ossicular chain (malleus, incus, and stapes), which amplify the signal and transmit it to the oval window of the cochlea.

Inside the cochlea, the resulting fluid motion displaces the basilar membrane and deflects the inner hair cells that sit atop it. These hair cells perform the mechanical-to-electrical conversion, releasing neurotransmitters at their base. This excites auditory nerve fibers, whose cell bodies form the spiral ganglion, sending electrical impulses toward the brain.

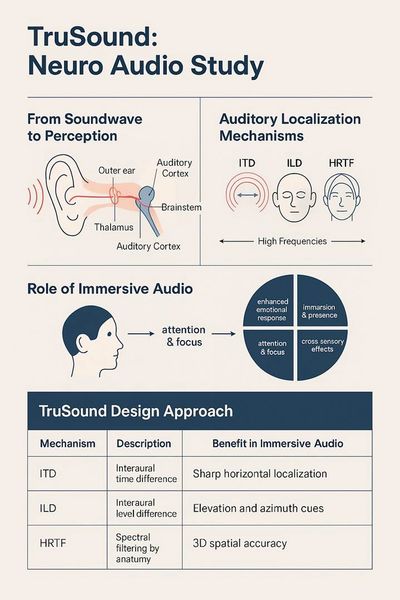

What’s remarkable is how the auditory system uses phase locking, rate coding, and place coding to decode not just pitch, but also timing, loudness, and location. Neural spike timing precise to within tens of microseconds supports interaural time difference (ITD) cues, while differences in firing rate between the two ears support interaural level difference (ILD) cues; both are essential for spatial hearing.

TruSound’s real-time DSP emulates this responsiveness. It adapts object trajectories, dynamic range, and spatial cues in real time to mimic how the brain prioritizes auditory motion and change. Its architecture accounts for temporal masking, spatial precedence, and frequency-dependent localization blur, so that listeners perceive immersion as natural and neurologically plausible.

Understanding the transduction process is more than anatomy; it is a guide for design fidelity. TruSound treats the audience’s auditory pathway not as a passive channel, but as an active co-author of the experience.

IV. Localization & Presence: From Survival to Storytelling

The ability to localize sounds is foundational to both safety and storytelling. From detecting the direction of a snapping twig in the forest to sensing where a character’s voice is coming from in a narrative space, our binaural hearing system equips us with remarkable precision.

Two primary mechanisms support this:

- Interaural Time Difference (ITD): The time delay between a sound reaching one ear versus the other

- Interaural Level Difference (ILD): The sound pressure level difference between ears, influenced by the head shadow effect

Additionally, spectral shaping by the pinnae (outer ears) helps detect elevation and front/back orientation. These cues are analyzed in the brainstem (particularly the superior olivary complex) and in the inferior colliculus of the midbrain, which together integrate timing, intensity, and frequency data before the information reaches the auditory cortex via the thalamus.
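The size of the ITD cue can be estimated with the classic Woodworth spherical-head approximation, ITD(θ) = (r / c)(θ + sin θ). It applies to a distant source at azimuth θ in radians, with 0 straight ahead, in the horizontal plane. The head radius (8.75 cm) and speed of sound (343 m/s) below are typical assumed values, not figures from this document. Real heads deviate from a sphere, so treat the result as an approximation.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average adult head radius
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 deg C


def woodworth_itd(azimuth_deg: float,
                  head_radius_m: float = HEAD_RADIUS_M,
                  c: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference (seconds) for a distant source, spherical-head model.

    Valid from -90 (hard left) to +90 (hard right) degrees in the horizontal
    plane; positive results mean the sound reaches the right ear first.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be within [-90, 90] degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 15, 45, 90):
        print(f"{az:3d} deg -> ITD = {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

Under these assumptions, the ITD grows from zero straight ahead to about 656 microseconds at 90 degrees. That is the sub-millisecond range the brainstem’s timing circuits must resolve.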

Localization enables presence, the feeling of “being there.” Presence is a cognitive-emotional state where external stimuli and internal mental models align. Sound, particularly immersive sound, is a dominant trigger of presence due to its pervasiveness and emotional salience.

TruSound optimizes these mechanisms through:

- Real-time object positioning across azimuth, elevation, and depth

- Distance rendering with Doppler cues and low-pass filtering

- Reflections, occlusion, and reverb modeling for environmental context

- Dynamic spatial scaling for different visitor positions or movement

This enables sound to move with the listener or toward them, creating active engagement, tension, or intimacy.
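Several of the positioning and distance cues listed above follow from basic acoustics.

- An object placed by azimuth, elevation, and depth can be converted to Cartesian coordinates.
- A point source in free field loses about 6 dB per doubling of distance.
- A source approaching a stationary listener is heard at a higher frequency, f' = f · c / (c - v), where v is its speed toward the listener.

The sketch below is a generic illustration under free-field assumptions. Its class and function names are hypothetical, and it is not TruSound’s renderer. It also omits the low-pass filtering stage.

```python
import math
from dataclasses import dataclass

SPEED_OF_SOUND_M_S = 343.0


@dataclass
class ObjectPosition:
    """Hypothetical object position relative to a listener at the origin."""
    azimuth_deg: float    # 0 = straight ahead, positive = to the right
    elevation_deg: float  # 0 = ear level, positive = above
    distance_m: float

    def to_cartesian(self) -> tuple[float, float, float]:
        """Convert to (x right, y front, z up) coordinates in metres."""
        az = math.radians(self.azimuth_deg)
        el = math.radians(self.elevation_deg)
        horizontal = self.distance_m * math.cos(el)
        return (horizontal * math.sin(az),
                horizontal * math.cos(az),
                self.distance_m * math.sin(el))


def distance_gain_db(distance_m: float, reference_m: float = 1.0) -> float:
    """Free-field inverse-distance attenuation: about -6 dB per doubling."""
    return -20.0 * math.log10(max(distance_m, 1e-3) / reference_m)


def doppler_ratio(prev: ObjectPosition, curr: ObjectPosition, dt_s: float,
                  c: float = SPEED_OF_SOUND_M_S) -> float:
    """Frequency ratio f'/f for a moving source and a stationary listener.

    The approach speed is estimated from the change in distance over dt_s,
    so a shrinking distance yields a ratio above 1 (the pitch rises).
    """
    approach_speed = (prev.distance_m - curr.distance_m) / dt_s
    if approach_speed >= c:
        raise ValueError("source must move slower than the speed of sound")
    return c / (c - approach_speed)


if __name__ == "__main__":
    # Hypothetical object drifting toward the listener over one 20 ms update.
    before = ObjectPosition(azimuth_deg=30.0, elevation_deg=10.0, distance_m=8.0)
    after = ObjectPosition(azimuth_deg=25.0, elevation_deg=10.0, distance_m=7.8)
    print("position (m):", [round(v, 2) for v in after.to_cartesian()])
    print(f"gain: {distance_gain_db(after.distance_m):.1f} dB re 1 m")
    print(f"Doppler ratio: {doppler_ratio(before, after, dt_s=0.02):.4f}")
```

Estimating velocity from the change in distance between updates keeps the sketch simple. A production renderer would more likely carry explicit velocity in the object metadata.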

By embracing the localization circuits of the brain, TruSound transforms storytelling into auditory choreography. Presence is no longer assumed; it is engineered.

V. Emotional Engagement & Auditory Memory

Sound is not just a spatial phenomenon; it is deeply emotional and mnemonic. Our brain uses auditory input to tag events with emotion, anchor memories, and reinforce identity. This is due to the dense interconnections between the auditory cortex, the amygdala (emotion center), and the hippocampus (memory center).

A song can bring tears. A tone can induce fear. A voice can evoke a lost loved one. These emotional reactions are not metaphorical; they are real neurological phenomena that result from tight coupling between acoustic stimuli and emotional memory circuits.

This is where immersive audio becomes transformative. By carefully modulating tone, rhythm, spatiality, and dynamics, TruSound creates emotional arcs that are not just heard; they are felt:

- Harmonic congruence to evoke safety or tension

- Dynamic pacing to mirror emotional narrative beats

- Sonic motifs that return with altered emotional weight

- Directional shifts to simulate surprise, conflict, or intimacy

TruSound enables creators to orchestrate echoic emotionality, waves that bypass intellectual analysis and go straight to the limbic system. It doesn’t just soundtrack a moment; it creates it.

VI. Echoic Forced Perspectives: Steering Perception with Sound

Visual forced perspective alters what we see. Echoic forced perspective alters what we feel, anticipate, or misinterpret through auditory illusion. This concept is grounded in psychoacoustics, the science of how we perceive sound, rather than just how sound behaves physically.

TruSound’s 3D object steering capabilities allow for dynamic manipulation of:

- Perceived object distance through frequency attenuation and reverb length

- Perceived object size via harmonic content and stereo width

- Psychological weight by combining low frequencies and reverberant fields

- Direction of threat or allure through velocity cues, Doppler effects, and occlusion

In immersive environments, be it museums, theaters, or walk-throughs, this technique:

- Directs guest attention without visual cues

- Stages narrative shifts across spaces

- Enhances accessibility through sound-as-navigation

- Aligns or distorts expectations for emotional effect

Echoic forced perspective is more than trickery; it is dramaturgy through vibration. TruSound gives storytellers the tools to build spatial tension, acoustic misdirection, and multi-threaded perception that visuals alone cannot achieve.
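The first steering parameter in this section, perceived distance, relies partly on reverberation. A standard way to quantify that cue is the direct-to-reverberant ratio (DRR). In a diffuse-field room model, direct sound falls about 6 dB per doubling of distance while the reverberant level stays roughly constant. This gives DRR ≈ 20 · log10(r_c / r), where r_c is the critical distance at which direct and reverberant energy are equal. The sketch below estimates r_c with the common Sabine-based approximation r_c ≈ 0.057 · √(Q · V / RT60). Here V is room volume in m³, RT60 is reverberation time in seconds, and Q is source directivity. These are textbook approximations, and the gallery values are hypothetical.

```python
import math


def critical_distance_m(volume_m3: float, rt60_s: float, directivity_q: float = 1.0) -> float:
    """Approximate critical distance in metres (Sabine-based diffuse-field estimate)."""
    return 0.057 * math.sqrt(directivity_q * volume_m3 / rt60_s)


def direct_to_reverberant_db(distance_m: float, critical_m: float) -> float:
    """Diffuse-field DRR: 0 dB at the critical distance, about -6 dB per doubling beyond it."""
    return 20.0 * math.log10(critical_m / distance_m)


if __name__ == "__main__":
    # Hypothetical gallery: 1200 m^3, RT60 of 1.4 s, omnidirectional source (Q = 1).
    rc = critical_distance_m(1200.0, 1.4)
    print(f"critical distance ~ {rc:.2f} m")
    for r in (0.5, 1.0, 2.0, 4.0, 8.0):
        print(f"source at {r:4.1f} m -> DRR ~ {direct_to_reverberant_db(r, rc):+5.1f} dB")
```

Lowering an object’s direct level relative to its reverberant send pushes it perceptually farther away. The frequency-attenuation half of the cue, high-frequency roll-off with distance, is not modeled here.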

VII. The Auditory Scene as a Story Architecture

We typically speak of “soundscapes,” but what TruSound enables is more than ambient layering; it is the crafting of auditory architecture. In this model, each object is not just a sound, but a scene element, like a wall, a doorway, a character.

By manipulating:

- Object size (amplitude and spatial footprint)

- Trajectory (how sound moves over time and space)

- Temporal relation (cue synchronization or counterpoint)

- Interaction logic (triggered responses, environmental adaptation)

TruSound allows experiences to become modular, reactive, and emotionally cohesive. Unlike conventional tracks, these experiences can evolve in response to audience behavior or storyline branching.

In themed entertainment, this opens the door to:

- Non-linear audio dramaturgy

- Multiple narrative POVs based on guest proximity or speed

- Audio that precedes, follows, or “speaks to” visitors as individuals

Sound becomes an architecture of guidance: it directs attention, shifts boundaries, and collapses the distance between imagination and reality.
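The parameters listed in this section (object size, trajectory, temporal relation, and interaction logic) can be pictured as a small data structure. The sketch below is hypothetical: the class, its fields, and the proximity trigger are illustrative names, not TruSound’s API. It interpolates an object’s position linearly between timed keyframes. It also latches a start time the first time a guest comes within a trigger radius.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class Keyframe:
    time_s: float
    position: Vec3  # x, y, z in metres


@dataclass
class SoundObject:
    """Hypothetical scene element: a sound with size, a trajectory, and a trigger."""
    name: str
    size_m: float                                            # apparent spatial footprint
    keyframes: list[Keyframe] = field(default_factory=list)  # sorted by time
    trigger_radius_m: float = 2.0                            # start when a guest is this close
    start_time_s: Optional[float] = None

    def position_at(self, t_s: float) -> Vec3:
        """Linear interpolation between keyframes, clamped at both ends."""
        if not self.keyframes:
            raise ValueError(f"{self.name} has no trajectory")
        times = [k.time_s for k in self.keyframes]
        i = bisect_right(times, t_s)
        if i == 0:
            return self.keyframes[0].position
        if i == len(self.keyframes):
            return self.keyframes[-1].position
        a, b = self.keyframes[i - 1], self.keyframes[i]
        u = (t_s - a.time_s) / (b.time_s - a.time_s)
        x, y, z = (pa + u * (pb - pa) for pa, pb in zip(a.position, b.position))
        return (x, y, z)

    def update_trigger(self, guest_distance_m: float, now_s: float) -> bool:
        """Interaction logic: latch the start time the first time a guest comes close."""
        if self.start_time_s is None and guest_distance_m <= self.trigger_radius_m:
            self.start_time_s = now_s
        return self.start_time_s is not None


if __name__ == "__main__":
    whisper = SoundObject("whisper", size_m=0.3, keyframes=[
        Keyframe(0.0, (-3.0, 2.0, 1.5)),
        Keyframe(4.0, (0.0, 0.5, 1.6)),
    ])
    if whisper.update_trigger(guest_distance_m=1.8, now_s=12.0):
        elapsed = 14.0 - whisper.start_time_s
        print(whisper.name, "at", whisper.position_at(elapsed))
```

Keeping trajectory and trigger state on the object itself makes each scene element self-describing. A non-linear or branching story then becomes a matter of swapping keyframes or triggers rather than re-rendering a fixed track.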

VIII. System Architecture and Design Integration

TruSound’s power lies not only in its sound engine but in how it integrates into experience ecosystems. Its architecture is designed for:

- Real-time adaptive rendering via object metadata and environmental inputs

- Multi-channel output flexibility, from 2-channel spatial fold-downs to more than 128 discrete outputs

- Compatibility with show control ecosystems through standard sync protocols and time-aligned cueing from media servers, projection systems, lighting consoles, and interactive platforms

- Low-latency distribution across IP and optical networks for large venues

Key system benefits:

- Self-healing DSP that accounts for speaker failure, acoustic drift, or visitor density

- Dynamic mapping to match room geometry and experience intent

- Edge computing compatibility to offload rendering from centralized servers
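The speaker-failure behavior described above can be illustrated, under simplifying assumptions, with pairwise amplitude panning on a horizontal ring of loudspeakers. This is two-dimensional vector base amplitude panning (VBAP), a published technique; this document does not say what TruSound uses internally. Each source is rendered by the two active speakers that bracket its direction. If a speaker is flagged as failed, it is excluded and its neighbors take over the gap. The speaker layout and failure flag are hypothetical, and angles are measured counterclockwise in degrees.

```python
import math
from typing import Optional


def vbap_2d(source_deg: float, speaker_deg: list[float],
            failed: Optional[set[int]] = None) -> dict[int, float]:
    """Constant-power pairwise panning gains on a horizontal speaker ring.

    Returns {speaker_index: gain}. Failed speakers are skipped, so the
    neighbouring active pair takes over. Assumes every gap between adjacent
    active speakers is smaller than 180 degrees.
    """
    failed = failed or set()
    active = sorted((a % 360.0, i) for i, a in enumerate(speaker_deg) if i not in failed)
    if len(active) < 2:
        raise ValueError("need at least two active speakers")
    px = math.cos(math.radians(source_deg))
    py = math.sin(math.radians(source_deg))
    for k in range(len(active)):
        (a1, i1), (a2, i2) = active[k], active[(k + 1) % len(active)]
        x1, y1 = math.cos(math.radians(a1)), math.sin(math.radians(a1))
        x2, y2 = math.cos(math.radians(a2)), math.sin(math.radians(a2))
        det = x1 * y2 - x2 * y1  # equals sin(a2 - a1)
        if det <= 1e-9:
            continue  # coincident pair, or one spanning 180 degrees or more
        # Solve p = g1*l1 + g2*l2 with Cramer's rule.
        g1 = (px * y2 - x2 * py) / det
        g2 = (x1 * py - px * y1) / det
        if g1 >= -1e-9 and g2 >= -1e-9:
            g1, g2 = max(g1, 0.0), max(g2, 0.0)
            norm = math.hypot(g1, g2)  # normalize so g1^2 + g2^2 = 1
            return {i1: g1 / norm, i2: g2 / norm}
    raise ValueError("no active speaker pair brackets the source direction")


if __name__ == "__main__":
    ring = [0.0, 60.0, 120.0, 180.0, 240.0, 300.0]  # hypothetical 6-speaker ring
    print("all speakers:", vbap_2d(50.0, ring))
    print("speaker 1 failed:", vbap_2d(50.0, ring, failed={1}))
```

Constant-power normalization keeps perceived loudness roughly steady as a source moves between speakers. Rerouting to a wider pair after a failure trades some localization sharpness for continuity, a reasonable default for unattended installations.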

Architects and AV consultants can design with TruSound as a modular, scalable layer, not requiring one-size-fits-all hardware, but adapting to context:

- Museums with overlapping exhibits and variable guest density

- Themed rides with precision timing and directional narrative

- Dome and planetarium shows with vertical and radial sound paths

With TruSound, audio is not an afterthought. It becomes part of the initial architectural intent, as fundamental as lighting or structural materials.

IX. Strategic Implications for Integrators and Creative Partners

For integrators, designers, content producers, and immersive architects, the TruSound paradigm offers more than a product; it offers a framework for experiential differentiation in an increasingly commoditized AV world. The science embedded in each of the previous sections is not abstract theory; it is a deployable advantage.

For AV Integrators:

- Modular Deployment: TruSound’s architecture allows for scalable deployment across form factors, from large-scale arenas and domes to boutique installations and traveling exhibits.

- Ease of Commissioning: Its adaptive self-healing DSP and spatial calibration routines reduce tuning cycles, enabling integrators to focus on storytelling intent rather than tech troubleshooting.

- Edge-Ready Architecture: With support for distributed rendering, TruSound can operate in edge-computing environments and integrate with spatial sensors, IoT beacons, and interactive triggers.

- Deterministic Synchronization: Designed for deterministic timing and frame-accurate synchronization, TruSound supports real-time operation with lighting desks, control interfaces, tracking systems, and media engines via flexible integration pathways such as network-based timing, hardware triggers, and synchronization bundles.
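Frame-accurate synchronization ultimately means converting a show-control time reference into an exact audio sample position. This document does not name specific protocols or rates. The illustration below assumes SMPTE-style non-drop-frame timecode (HH:MM:SS:FF) at an integer frame rate, with a 48 kHz audio clock. Under those assumptions the conversion is pure integer arithmetic. Drop-frame timecode at 29.97 fps needs additional correction and is not handled.

```python
def timecode_to_sample(tc: str, fps: int = 30, sample_rate: int = 48_000) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an audio sample index.

    Uses integer arithmetic only, so long shows accumulate no rounding drift.
    """
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if hh < 0 or not (0 <= mm < 60 and 0 <= ss < 60 and 0 <= ff < fps):
        raise ValueError(f"invalid timecode {tc!r} at {fps} fps")
    total_frames = ((hh * 60 + mm) * 60 + ss) * fps + ff
    samples, remainder = divmod(total_frames * sample_rate, fps)
    if remainder:
        raise ValueError("this frame boundary does not fall on a whole sample")
    return samples


if __name__ == "__main__":
    # A cue at 00:01:30:15 in 30 fps timecode, rendered on a 48 kHz clock.
    print(timecode_to_sample("00:01:30:15"))  # prints 4344000
```

At 30 fps and 48 kHz each frame spans exactly 1,600 samples, so every frame boundary maps to a whole sample. The remainder check flags combinations where that is not true.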

For Creative Producers and Storytellers:

- Scene-Based Object Control: Each sound can be treated as an actor within a scene, complete with trajectory, emotion curve, spatial signature, and interactive logic.

- Real-Time Spatial Direction: Artists and directors can audition, adjust, and choreograph sonic moments on the fly; live rendering empowers fast iteration.

- Narrative Continuity Tools: TruSound allows sound threads to follow characters, objects, or guests, maintaining narrative coherence even as space and interaction evolve.

For Architects and Environmental Designers:

- Sonic Zones as Design Layers: With TruSound, spatial audio becomes part of the initial architectural narrative, not a late-stage add-on. Sound becomes structure.

- Invisible Integration: TruSound’s precision means fewer speakers can do more, reducing clutter, preserving aesthetics, and supporting LEED/sustainability goals.

- Material-Responsive Acoustics: Its ability to model and adapt to environmental changes allows architectural materials to shape, not inhibit, the sonic intent.

For Developers and Clients:

- Higher Engagement ROI: Immersive audio extends dwell time, emotional resonance, and return visitation in cultural, retail, and themed spaces.

- Future-Proof Investment: With a software-driven core, TruSound can scale with content upgrades, changing demographics, or new programming cycles without major hardware overhauls.

- Brand Voice Amplified: Every brand has a voice. TruSound gives it 3D presence: whispers that feel intimate, signals that feel urgent, themes that live spatially.

In short, TruSound is not a product to be specified. It is a collaborative platform to co-create with. For integrators and creatives ready to move beyond channels, zones, and mixes, TruSound offers a leap into immersive orchestration, where sound is no longer an asset, but the experience itself.

X. Once upon a time … Now

We stand at the intersection of biology and design, where neuroscience and storytelling converge to reframe how we think about space, sound, and presence. The human brain, our Echoic Brain, is not simply a receiver of sound; it is an architect of worlds, a composer of memory, and a curator of emotion. TruSound is not simply a sound system; it is a platform for collaborating with this innate intelligence.

To create in sound is to shape experience. It is to sculpt time, extend meaning, and cast invisible architecture across physical space. In this architecture, listeners do not merely hear; they feel, orient, remember, and transform.

The TruSound Paradigm invites a new generation of creators, technologists, integrators, and architects to treat sound not as accompaniment, but as foundation. Not as a passive stream, but as an active dialogue between narrative and neurobiology, between place and perception.

Let us design for the whole listener, for the vestibular echoes in the skull, for the emotional frequencies in the chest, for the subconscious signatures in the brain. Let us build experiences that honor the profound intelligence of the auditory system and match it with technologies that elevate, not interrupt.

Let TruSound be the instrument. Let the Echoic Brain be the stage. Let immersion be not just felt but remembered.

This is not a trend. This is a transition.

From sound as signal… to sound as story. From hearing as input… to hearing as embodiment. From audio as function… to audio as architecture.

Copyright © 2024 TruSound - All Rights Reserved.
